I am Sourabh Tiwari, a Robotics Software Engineer specializing in autonomous navigation, computer vision, and sensor fusion. With expertise in ROS2, AI-driven perception, and real-world robotic deployments, I have worked on autonomous delivery robots, drones, industrial forklifts, and mobile robots. I build end-to-end robotics solutions—from centimeter-accurate localization with RTK-GPS, LiDAR, and IMU to advanced perception pipelines using semantic segmentation, depth sensing, and obstacle-aware cost maps. My work spans SLAM-based navigation, AWS-powered teleoperation, and GPU-optimized vision models, delivering scalable, reliable robotics systems.

December 2024 - Present

Dubai, UAE

Experience

At PeykBot, I develop advanced computer vision and navigation systems for autonomous delivery robots, enabling reliable operation in both indoor and outdoor environments. My work includes integrating RGB-depth perception, semantic segmentation, and obstacle-aware cost maps with centimeter-accurate localization using RTK-GPS, LiDAR, and IMU, all within a ROS2-based perception-to-navigation pipeline.

Key Contributions

1. Developed computer vision solutions for autonomous navigation in dynamic indoor and outdoor environments.

2. Integrated traffic sign detection with distance estimation using synchronized RGB and depth data (Intel RealSense).

3. Implemented autonomous docking station parking strategies based on visual perception.

4. Developed a centimeter-accurate localization system by integrating RTK-GPS, IMU, and LiDAR odometry using an Unscented Kalman Filter (UKF) in ROS 2.

5. Deployed on a mobile robot with integrated depth sensing, semantic segmentation, and obstacle-aware cost maps, forming a complete perception-to-navigation pipeline.

June 2023 - November 2024

Bengaluru

Experience

At Control One.AI, I led the development of autonomous and semi-autonomous vehicles, focusing on navigation, control, and automation. My work spanned both hardware and software integration, with a focus on ROS2, Micro-ROS, and advanced sensor fusion for complex robotics systems.

Key Contributions

1. Autonomous Vehicle Development: Led the design and integration of control systems for autonomous and semi-autonomous vehicles, including forklifts and mobile robots (BOPT), with ROS2-based navigation and sensor fusion.

2. Reactive Navigation & Image Processing: Developed reactive navigation systems using 1280p image processing, 2D/3D object detection, and RTAB-Map SLAM for 3D environmental mapping and obstacle avoidance.

3. Sensor Fusion & Integration: Integrated and fused data from various sensors, including ToF sensors, LiDAR, and encoders, to optimize vehicle performance and ensure safe and reliable operation in dynamic environments.

4. AWS-Powered Teleoperation: Implemented AWS-based teleoperation solutions, enabling remote control of vehicles and robots with real-time data streaming and monitoring.

5. PID Control Systems: Utilized PID controllers for precise steering and speed control, enhancing vehicle navigation and stability.

6. CUDA & TensorRT Optimization: Optimized image processing algorithms using CUDA and OpenCV for faster object detection, and integrated TensorRT for efficient inference on NVIDIA hardware.

7. 3D Pallet Detection: Developed a 3D pallet detection model using NVIDIA platforms, enabling precise handling and identification of industrial pallets in autonomous logistics operations.

8. Team Leadership: Managed and led a robotics team, overseeing system design, integration, and automation tasks to meet project deadlines and ensure seamless collaboration across functions.

October 2022 - May 2023

IIT Delhi

Experience

At VECROS, I contributed to the development of autonomous drone systems, focusing on flight control, navigation, and obstacle avoidance. I worked extensively with ArduPilot and MAVLink, ensuring seamless operation across various flight modes and environments.

Key Contributions

1. Autonomous Navigation Systems: Developed path planning and navigation algorithms for autonomous drones, ensuring precise movement and obstacle avoidance.

2. Obstacle Avoidance: Implemented advanced obstacle avoidance algorithms using Intel RealSense cameras to enable safe and efficient navigation in dynamic environments.

3. 3D Mapping & Point Cloud Data: Utilized LiDAR for 3D mapping and point cloud data collection, enabling accurate environmental modeling and improving autonomous navigation capabilities.

4. Drone Swarm Communication: Developed mesh network communication using ESP32 to enable real-time coordination and data exchange between multiple drones, optimizing swarm behavior.

5. Object Detection: Trained YOLO models for object detection to enhance the drone’s ability to detect and respond to obstacles in real time.

6. Companion Computer Integration: Designed companion computers that interfaced with ArduPilot through the MAVLink protocol, enabling efficient communication and control between the drone’s flight controller and onboard systems.

April 2021 - September 2022

Experience

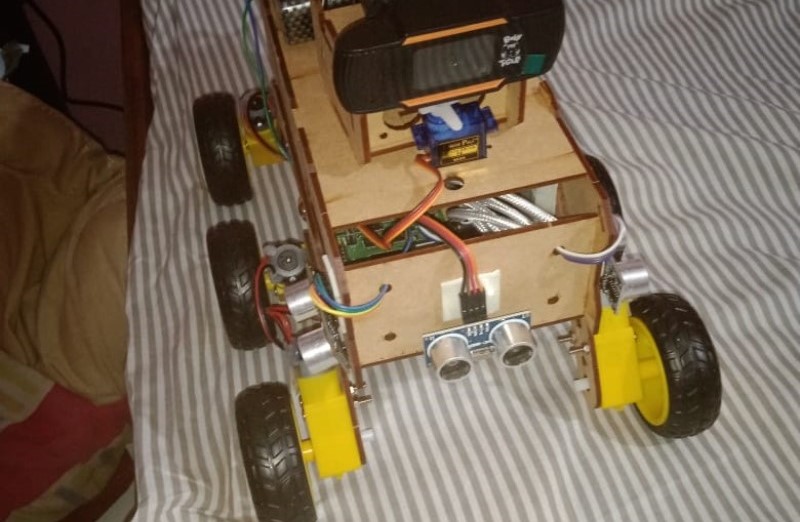

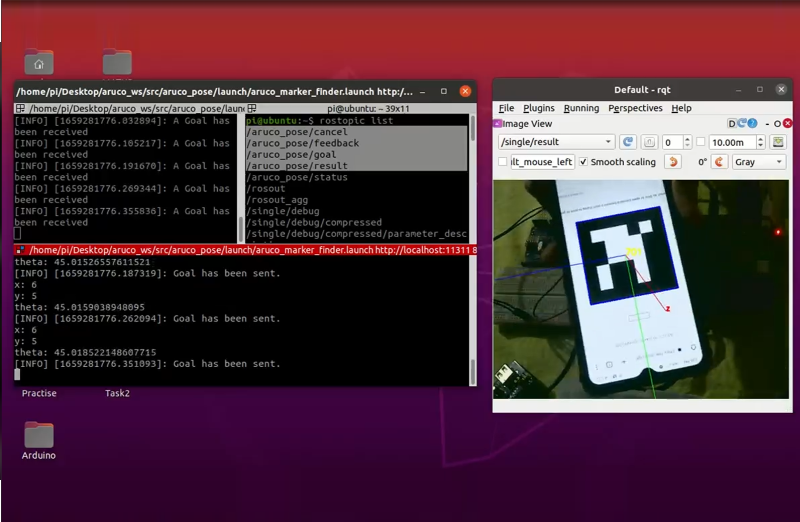

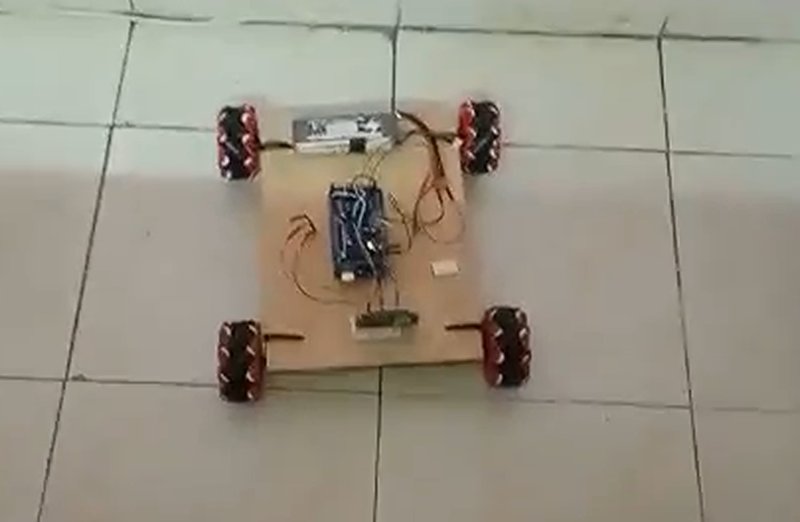

At ABA AIOT, I worked on a variety of robotics projects, focusing on ROS, SLAM, and navigation systems for autonomous platforms. My role involved both hardware and software development, integrating sensors, motors, and control systems to create robust and reliable robotic systems.

Key Contributions

1. Closed-Loop Motor Control: Developed closed-loop control systems for DC gear motors and BLDC motors using ROS on platforms like NVIDIA Jetson Nano, Teensy 4.1, and AVR boards, achieving precise control and enhanced system performance.

2. ROS Integration & Simulation: Created CAD models of robots, converted them into URDF format, and deployed them in Gazebo for simulation and testing, utilizing ROS plugins for control and sensor integration.

3. SLAM & Mapping: Implemented the RTAB-Map SLAM algorithm for 3D mapping and navigation, enabling autonomous robots to understand and navigate dynamic environments.

4. Sensor Integration & Data Communication: Integrated various sensors and hardware, including Intel RealSense cameras, radar systems, and Mecanum wheels, into the robotic systems for improved navigation and obstacle avoidance. Developed MQTT and ROS environments for communication between multiple Jetson Nano units.

5. Multi-Platform Development: Worked on custom interfaces between NVIDIA Jetson and microcontrollers, enhancing communication and data flow for multi-sensor and actuator control.

6. Embedded Systems Development: Contributed to the development of embedded systems and communication protocols, working with hardware platforms like Teensy 4.1 and ESP32 to create responsive and scalable robotic solutions.

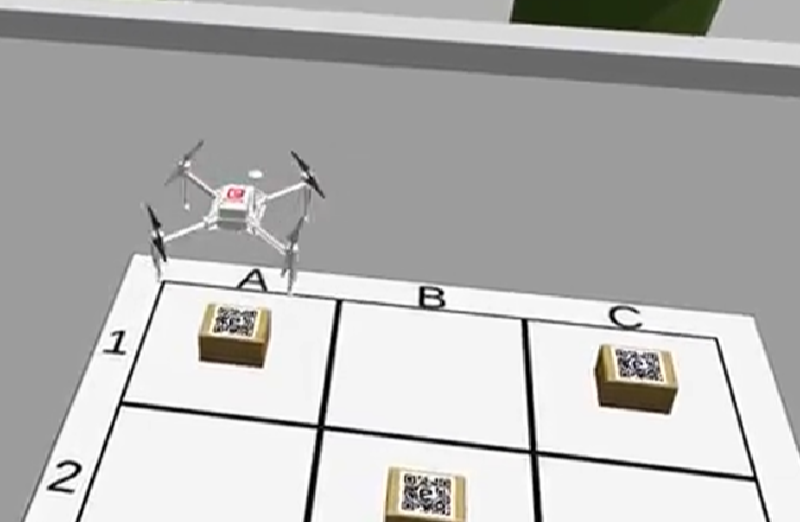

October 2020 - March 2021

E-Yantra

Experience

Theme Allotted: Vitarana Drone (Autonomous Drone)

Worked On

ROS, Gazebo, OpenCV, QR code scanning, obstacle avoidance, PID controller, path planning, object tracking using camera.

2017 - 2021

Guru Gobind Singh Indraprastha University, New Delhi

Completed my Bachelor's degree in Electrical and Electronics Engineering at Guru Gobind Singh Indraprastha University, where I secured a CGPA of 7.1.

2015 - 2017

School of Secondary board

Completed Class XII at Heera Public School, New Delhi.

Address

New Delhi, India

Phone

+91-8826885541, +971-581506365

Email

sourabhtiwari10399@gmail.com